Norconex HTTP Collector 2.3.0 / 2.4.0-20160209 Snapshot | 126.4 MB

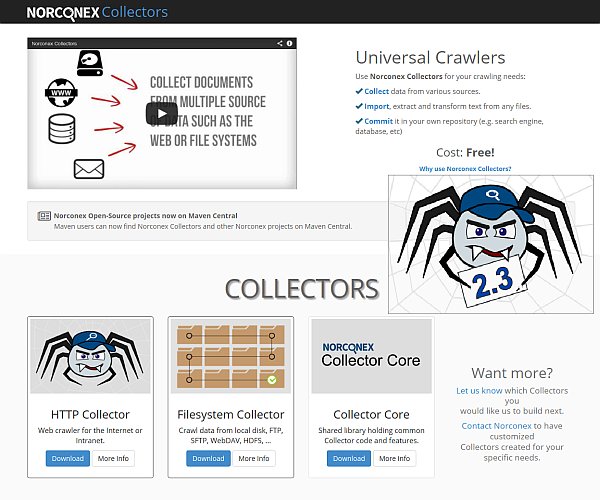

Search engines make the Internet usable in a meaningful way; without them, finding information would take far more time. Because they are essential tools for anyone browsing online, developers constantly work on improving them. Norconex HTTP Collector is one such auxiliary tool: a web crawler that can crawl sites quickly and either save the results to a local folder or feed them directly to a search engine.

The application supports multi-threaded operation, so several pages can be fetched and processed in parallel, which shortens crawl times. This is especially useful for very large websites.
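To illustrate what multi-threaded crawling means in practice, here is a minimal Java sketch, assuming a hypothetical `fetcher` function that stands in for downloading and parsing a page. It is not Norconex's internal implementation; the class and method names are illustrative only. It simply processes a list of URLs concurrently on a fixed-size thread pool from `java.util.concurrent`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Function;

public class ThreadedCrawlSketch {

    // Hypothetical helper (not the Norconex API): processes every URL on a
    // fixed-size thread pool. `fetcher` stands in for a real HTTP download
    // and parse step and must not return null (ConcurrentHashMap rejects nulls).
    public static Map<String, String> crawlAll(List<String> urls, int threads,
                                               Function<String, String> fetcher)
            throws InterruptedException {
        Map<String, String> results = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Callable<Void>> tasks = new ArrayList<>();
            for (String url : urls) {
                tasks.add(() -> {
                    results.put(url, fetcher.apply(url));
                    return null;
                });
            }
            pool.invokeAll(tasks); // blocks until every task has completed
        } finally {
            pool.shutdown();
        }
        return results;
    }

    public static void main(String[] args) throws InterruptedException {
        List<String> urls = List.of("http://example.com/a", "http://example.com/b");
        Map<String, String> out = crawlAll(urls, 4, u -> "content of " + u);
        out.forEach((u, c) -> System.out.println(u + " -> " + c));
    }
}
```

A thread-safe map collects the results because several worker threads write to it at the same time; a real crawler would also need a shared queue of newly discovered URLs.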

Once a target has been specified, the program automatically attempts to detect the language of each document. Because the library supports OCR, text can also be extracted from images and PDFs.

Other formats, such as HTML pages and Office documents, are also supported, and the crawler can process canonical URLs.
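A page usually declares its canonical URL with a `<link rel="canonical" href="/preferred-url">` element in its HTML head, telling crawlers which address is preferred when the same content is reachable under several URLs. The following simplified, regex-based Java sketch (hypothetical class and method names; not the API of Norconex's canonical link detector) finds such a tag and resolves a relative `href` against the page URL with `java.net.URI`.

```java
import java.net.URI;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class CanonicalSketch {
    // Simplified patterns; a production crawler would use a real HTML parser.
    private static final Pattern LINK_TAG =
            Pattern.compile("<link\\b[^>]*>", Pattern.CASE_INSENSITIVE);
    private static final Pattern REL_CANONICAL =
            Pattern.compile("\\brel\\s*=\\s*[\"']?canonical\\b", Pattern.CASE_INSENSITIVE);
    private static final Pattern HREF =
            Pattern.compile("\\bhref\\s*=\\s*[\"']([^\"']+)[\"']", Pattern.CASE_INSENSITIVE);

    // Returns the absolute canonical URL declared in `html`, if any.
    // URI.create throws IllegalArgumentException on malformed URLs.
    public static Optional<String> findCanonical(String pageUrl, String html) {
        Matcher tags = LINK_TAG.matcher(html);
        while (tags.find()) {
            String tag = tags.group();
            if (!REL_CANONICAL.matcher(tag).find()) {
                continue; // some other <link>, e.g. a stylesheet
            }
            Matcher href = HREF.matcher(tag);
            if (href.find()) {
                // Resolve relative hrefs such as "/products/42" against the page URL.
                return Optional.of(URI.create(pageUrl).resolve(href.group(1).trim()).toString());
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        String html = "<head><link rel=\"canonical\" href=\"/products/42\"></head>";
        System.out.println(findCanonical("http://example.com/products/42?sort=price", html)
                .orElse("(none)"));
    }
}
```

This sketch only handles quoted `href` values and does not unescape HTML entities such as `&amp;` inside them, both of which a robust detector must deal with.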

Several settings can be customized when starting a job. For example, the crawling speed can be adjusted, the crawler can be configured to treat embedded documents as distinct documents, and hierarchical fields can be built.
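Adjusting crawling speed typically means enforcing a minimum delay between two requests to the same host. The Java sketch below assumes a simple fixed per-host delay; the class name and `reserve` method are hypothetical and do not reflect Norconex's own delay configuration (which, per the feature list, can also vary by schedule). It takes the current time as a parameter so the logic can be checked without actually sleeping.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

public class PolitenessDelaySketch {
    private final long delayMillis;
    // Host -> time (ms) of the most recently reserved request slot.
    private final Map<String, Long> lastHit = new HashMap<>();

    public PolitenessDelaySketch(long delayMillis) {
        this.delayMillis = delayMillis;
    }

    // Returns how many milliseconds the caller should wait before requesting
    // `url` at time `nowMillis`, and reserves that slot for the URL's host.
    // Synchronized so several crawler threads can share one instance.
    public synchronized long reserve(String url, long nowMillis) {
        String host = URI.create(url).getHost();
        Long previous = lastHit.get(host);
        long earliest = (previous == null)
                ? nowMillis
                : Math.max(nowMillis, previous + delayMillis);
        lastHit.put(host, earliest);
        return earliest - nowMillis;
    }

    public static void main(String[] args) {
        PolitenessDelaySketch delays = new PolitenessDelaySketch(1000);
        System.out.println(delays.reserve("http://example.com/a", 0));   // 0
        System.out.println(delays.reserve("http://example.com/b", 200)); // 800
        System.out.println(delays.reserve("http://other.org/", 200));    // 0
    }
}
```

Reserving the slot at the moment the wait is computed means concurrent threads targeting the same host queue up one delay apart instead of all waking at once.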

Output documents can be filtered based on their URL, their HTTP headers, or their metadata.
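As a rough illustration of URL- and header-based filtering, here is a minimal Java sketch. The class and method names are hypothetical and are not Norconex's filter classes; it assumes a document is kept only if its URL matches none of a list of exclusion regexes and its `Content-Type` header starts with a required media type.

```java
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.regex.Pattern;

public class FilterSketch {

    // URL filter: reject the document if any exclusion pattern matches the whole URL.
    public static boolean acceptUrl(String url, List<Pattern> excludes) {
        for (Pattern p : excludes) {
            if (p.matcher(url).matches()) {
                return false;
            }
        }
        return true;
    }

    // Header filter: accept only if Content-Type starts with the required type.
    // HTTP header names are case-insensitive, so names are compared ignoring case.
    public static boolean acceptHeaders(Map<String, String> headers, String requiredType) {
        for (Map.Entry<String, String> e : headers.entrySet()) {
            if (e.getKey().equalsIgnoreCase("Content-Type")) {
                return e.getValue().toLowerCase(Locale.ROOT)
                        .startsWith(requiredType.toLowerCase(Locale.ROOT));
            }
        }
        return false; // no Content-Type header: rejected (a choice made by this sketch)
    }

    public static void main(String[] args) {
        List<Pattern> excludes = List.of(Pattern.compile(".*\\.(jpg|png|gif)$"));
        System.out.println(acceptUrl("http://example.com/logo.png", excludes));   // false
        System.out.println(acceptUrl("http://example.com/about.html", excludes)); // true
        System.out.println(acceptHeaders(
                Map.of("content-type", "text/html; charset=UTF-8"), "text/html")); // true
    }
}
```

URL filters can run before a page is downloaded, whereas header filters need at least the HTTP response headers, which is why crawlers usually apply them in that order.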

For ease of use, several samples are included so that developers and users can quickly evaluate what the tool can do.

A concise online manual covers many common issues, and the forums offer further help in getting good results.

FEATURES

• Multi-threaded.

• Supports different hit intervals according to different schedules.

• Can crawl millions of pages on a single server of average capacity.

• Extracts text from many file formats (HTML, PDF, Word, etc.)

• Extracts metadata associated with documents.

• Language detection.

• Many content and metadata manipulation options.

• OCR support on images and PDFs.

• Translation support.

• Dynamic title generation.

• Configurable crawling speed.

• Offers URL normalization.

• Detects modified and deleted documents.

• Supports various website authentication schemes.

• Supports sitemap.xml and robots rules.

• Supports canonical URLs.

• Can filter documents based on URL, HTTP headers, content, or metadata.

• Can treat embedded documents as distinct documents.

• Can split a formatted document into multiple documents.

• Can store crawled URLs in different database engines.

• Can re-process or delete URLs no longer linked by other crawled pages.

• Supports different URL extraction strategies for different content types.

• Fires more than 20 crawler event types for custom event listeners.

• Date parsers/formatters to match your source/target repository dates.

• Can create hierarchical fields.

NEW IN VERSION 2.3.0

• GenericHttpClientFactory now allows you to set HTTP request headers on every HTTP call a crawler makes.

• New crawler configuration options: stayOnProtocol, stayOnDomain, and stayOnPort. These settings can be applied as attributes of the startURLs tag in an XML configuration, or on the object returned by HttpCrawlerConfig#getURLCrawlScopeStrategy(). This addition affects or replaces previous implementations discussed in #138, #135, #131, #17, and possibly others.

• It is now possible to specify one or more sitemap URLs as “start URLs”, using the new HttpCrawlerConfig#[set|get]StartSitemapURLs(…) methods.

• GenericURLNormalizer now applies a few normalizations by default, as described in its Javadoc.

• New StandardSitemapFactory#setPaths(…) method to specify where to look for sitemap files for each processed URL (relative to the URL root).

• The “sitemap” tag used to set the ISitemapResolverFactory implementation has been renamed to “sitemapResolverFactory” to avoid confusion with the “sitemap” tag that can now be set as a start URL.

• URL normalization now always takes place by default, using GenericURLNormalizer. It can be turned off either by setting the normalizer to null in the crawler configuration or by invoking GenericURLNormalizer#setDisabled(true).

• URLs extracted from a document are now stored in “collector.referenced-urls” after they have been normalized.

• URL redirects are now logged as REJECTED_REDIRECTED (log level INFO).

• HtmlLinkExtractor has been deprecated in favor of GenericLinkExtractor.

• HttpCrawlerConfig#[set|get]UrlsFiles(…) has been deprecated in favor of HttpCrawlerConfig#[set|get]StartUrlsFiles(…).

• StandardSitemapFactory#setLocations(…) is now deprecated in favor of specifying sitemaps as start URLs.

• ISitemapResolver#resolveSitemaps(…) has a new argument to specify whether the provided sitemap locations were defined as “start URLs” or not.

• Now logs User-Agent upon startup (log level INFO).

• Maven dependency updates: Norconex Collector Core 1.3.0, Norconex Commons Lang 1.8.0.

• Added loggers for the new event types to log4j.properties.

• Corrected typos and improved documentation.

• Configuration-related classes are now equal after being saved and loaded back. The equals/hashCode/toString methods of those classes are now implemented uniformly and were added where missing.

• Fixed some configuration classes not always being saved to XML properly or giving errors.

• Relative redirect URLs are now converted to absolute.

• Fixed robots.txt being fetched before reference filters were executed. robots.txt is no longer fetched for rejected references.

• GenericLinkExtractor now unescapes HTML entities in URLs.

• Fixed ClassCastException in HttpCrawlerRedirectStrategy when using an HTTP Proxy.

• GenericCanonicalLinkDetector now supports links that are escaped (HTML-entities). They are now unescaped before they are processed.

• Fixed circular redirect exception.

• GenericLinkExtractor and TikaLinkExtractor now extract meta http-equiv refresh properly when “refresh” is without quotes or not lowercase.

• Fixed duplicate commits when multiple URL redirects are pointing to the same target URL.

• Fixed possible URISyntaxException in GenericURLNormalizer (fixed by updated Norconex Commons Lang URLNormalizer dependency).

SYSTEM REQUIREMENTS

• Java JRE

• Internet connection

• OS: Windows Vista / 7 / 8 / 10 (32/64-bit)
